AI Battle: GPT-4 vs Claude - Full Review in 5 Minutes

Intro

OpenAI launched GPT-4 on March 14, 2023. At about the same time, Anthropic, an AI startup founded by former OpenAI employees, released its AI model Claude to the public, and it drew considerable praise on Twitter. This prompted us to compare GPT-4 and Claude.

To compare the performance and capabilities of GPT-4 and Claude AI, we gave both models the same series of prompts covering a range of topics and query types. The prompts were designed to test each model's understanding of context, its ability to answer complex questions, and the overall coherence of its responses.

Understanding the differences between GPT-4 and Claude AI is essential for anyone looking to use AI in their business, research, or daily life. We tested GPT-4 and Claude with prompts on math, personal daily planning, fact-checking, fictional works, comedic writing, and coding, with some impressive results that we can’t wait to show you. We start with a concise overview of the results, in case you don't have time to read the entire article.

In summary:

Our comparison shows a clear division of strengths between GPT-4 and Claude AI. GPT-4 performed better on coding and mathematical reasoning, and it shows more potential for helping people without coding experience or a computer science background. Claude AI, by contrast, gave more accurate responses on factual knowledge tasks. For general content writing, the two performed at comparable levels, so user preference is the deciding factor, although Claude AI tended to produce more creative content. GPT-4 holds a slight edge in its ability to access basic real-time data such as the date and time, a feature not apparent in Claude AI.

Additionally, GPT-4 is priced at $20 per month, while Claude AI is free to use on Slack. The choice between the two therefore depends on your individual needs, preferences, and budget.

Prompts and Comparison: GPT-4 vs Claude

Mathematical Reasoning

Prompt:

You toss a fair coin three times:

1. What is the probability of three heads, HHH?

2. What is the probability that you observe exactly one heads?

3. Given that you have observed at least one heads, what is the probability that you observe at least two heads?

Reference: https://www.probabilitycourse.com/chapter1/1_4_5_solved3.php

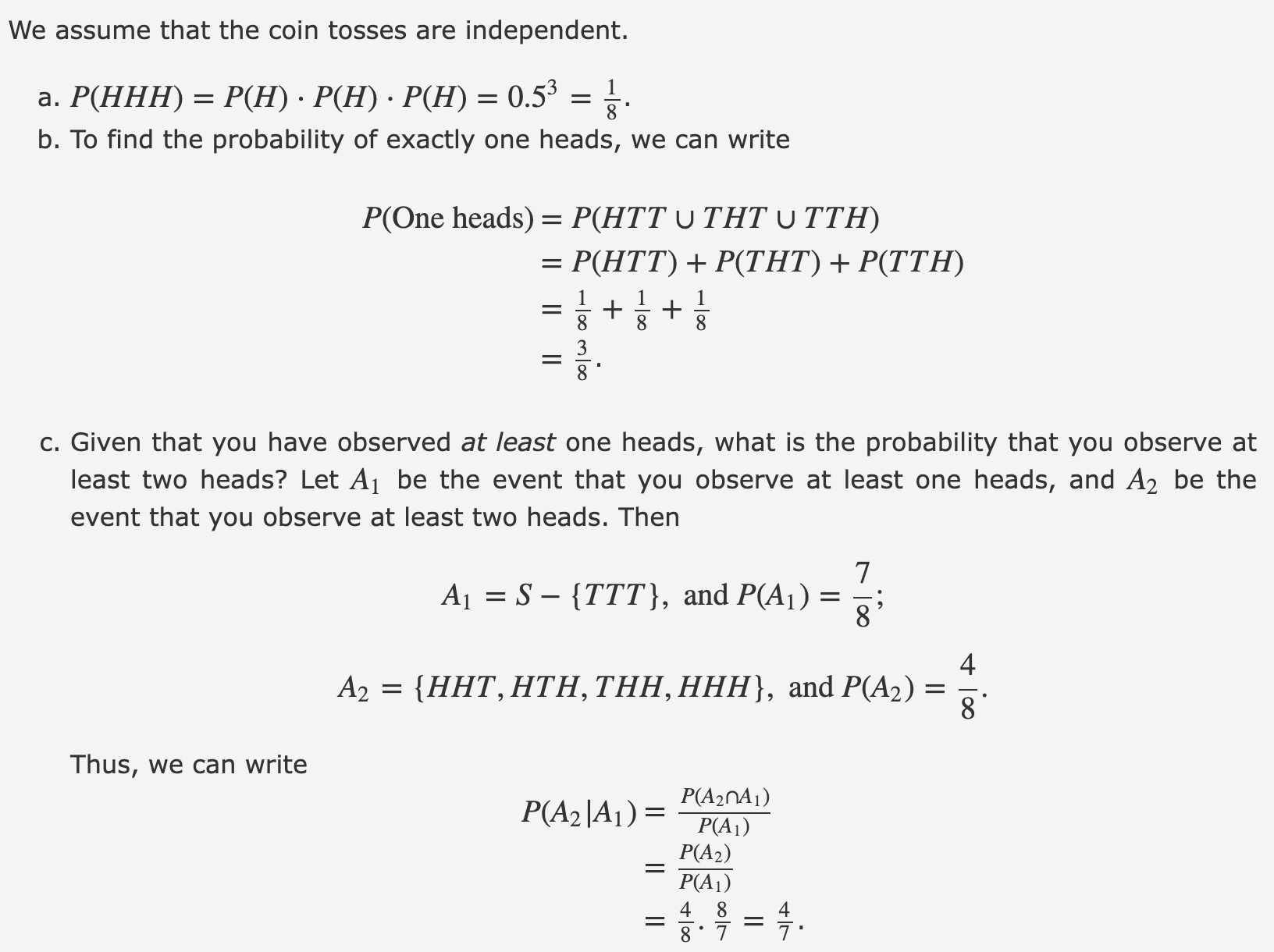

Correct Answer

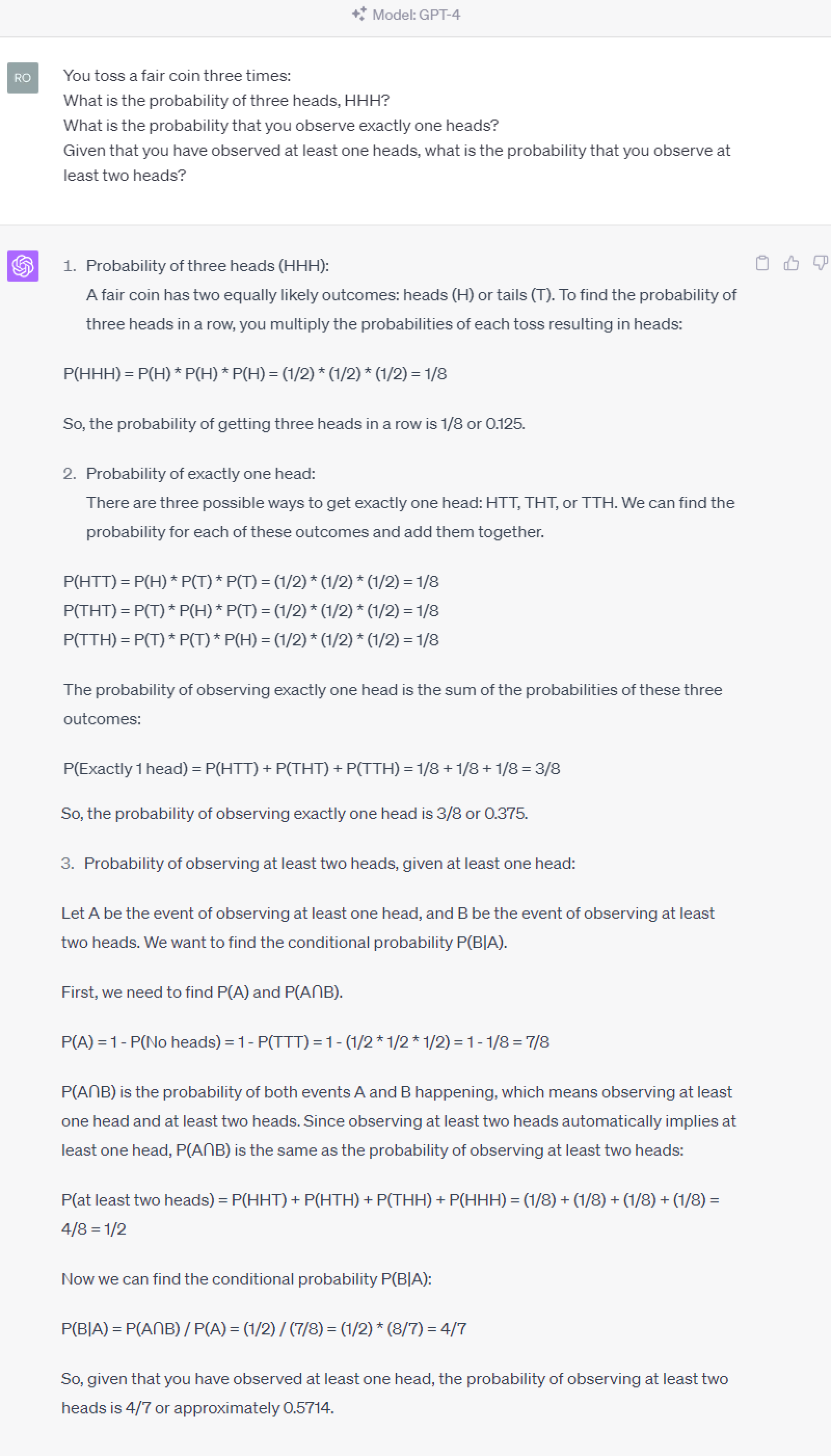
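As a cross-check on the correct answers, all three probabilities can be computed exactly by enumerating the 2^3 = 8 equally likely outcomes. Question 3 is a conditional probability: since every outcome with at least two heads also has at least one, P(at least two | at least one) = P(at least two) / P(at least one) = (4/8) / (7/8) = 4/7. This is a minimal Python sketch of our own, not taken from the reference page or from either model's answer; the helper names are illustrative.

```python
from fractions import Fraction
from itertools import product

# All 2**3 = 8 equally likely outcomes of three fair coin tosses, e.g. ('H', 'T', 'H').
outcomes = list(product("HT", repeat=3))


def prob(event):
    """Exact probability of `event` (a predicate on one outcome) under the uniform measure."""
    hits = sum(1 for o in outcomes if event(o))
    return Fraction(hits, len(outcomes))


def heads(outcome):
    """Number of heads in one outcome."""
    return outcome.count("H")


p_hhh = prob(lambda o: heads(o) == 3)           # question 1
p_exactly_one = prob(lambda o: heads(o) == 1)   # question 2
p_at_least_one = prob(lambda o: heads(o) >= 1)
p_at_least_two = prob(lambda o: heads(o) >= 2)
# Question 3: "at least two" implies "at least one", so the joint event is just "at least two".
p_two_given_one = p_at_least_two / p_at_least_one

print(p_hhh, p_exactly_one, p_two_given_one)  # 1/8 3/8 4/7
```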

GPT-4

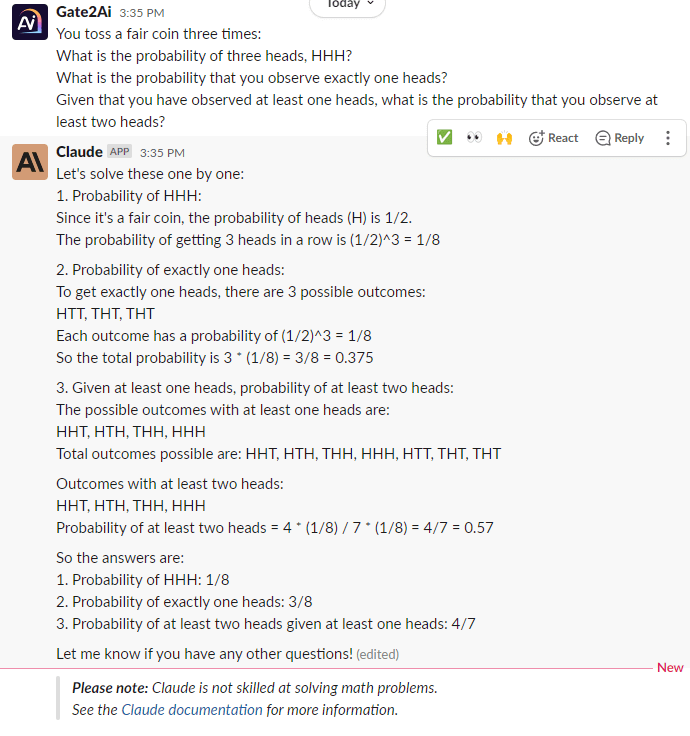

Claude

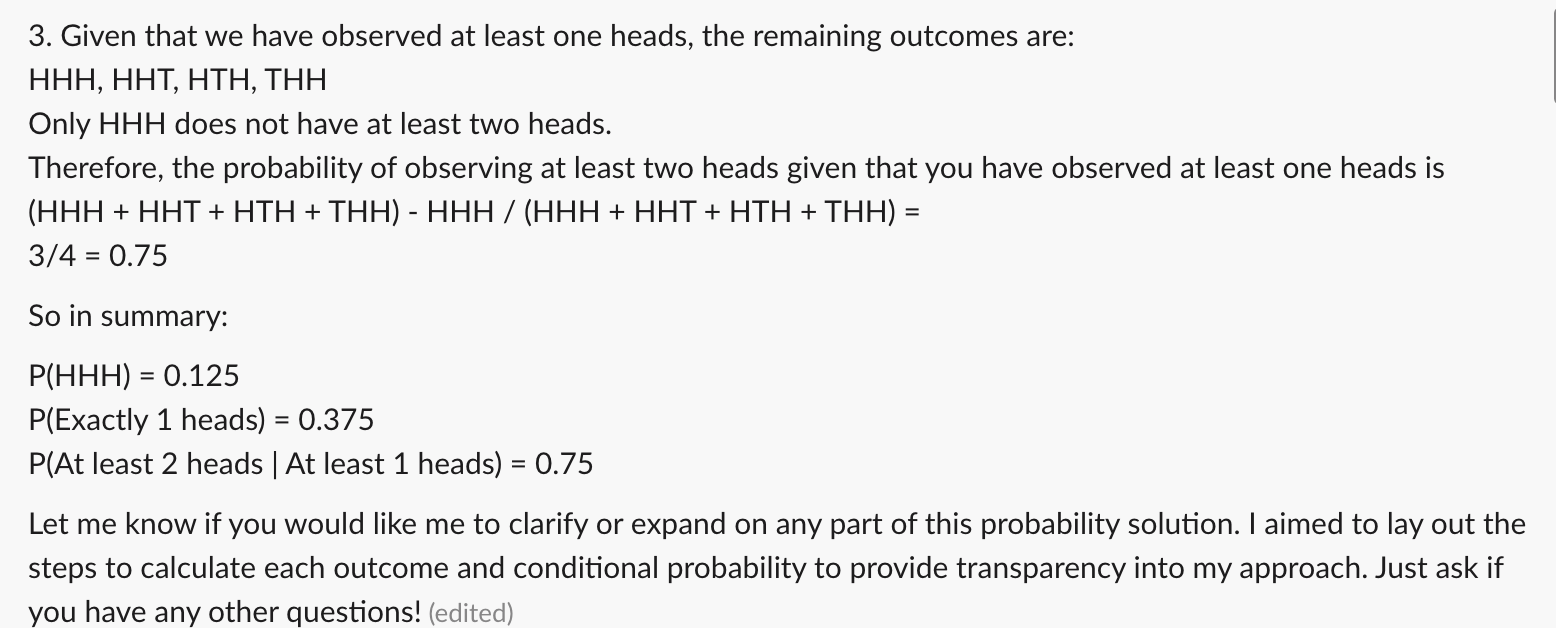

In an earlier attempt, Claude answered question 3 incorrectly.

Comparison

We also tested other math questions, and GPT-4 performed better than Claude, based on both our tests and OpenAI's research.

Book Reading List

Prompt:

Give a book reading list and create a reading schedule for these books so that I can complete them by the end of this year.

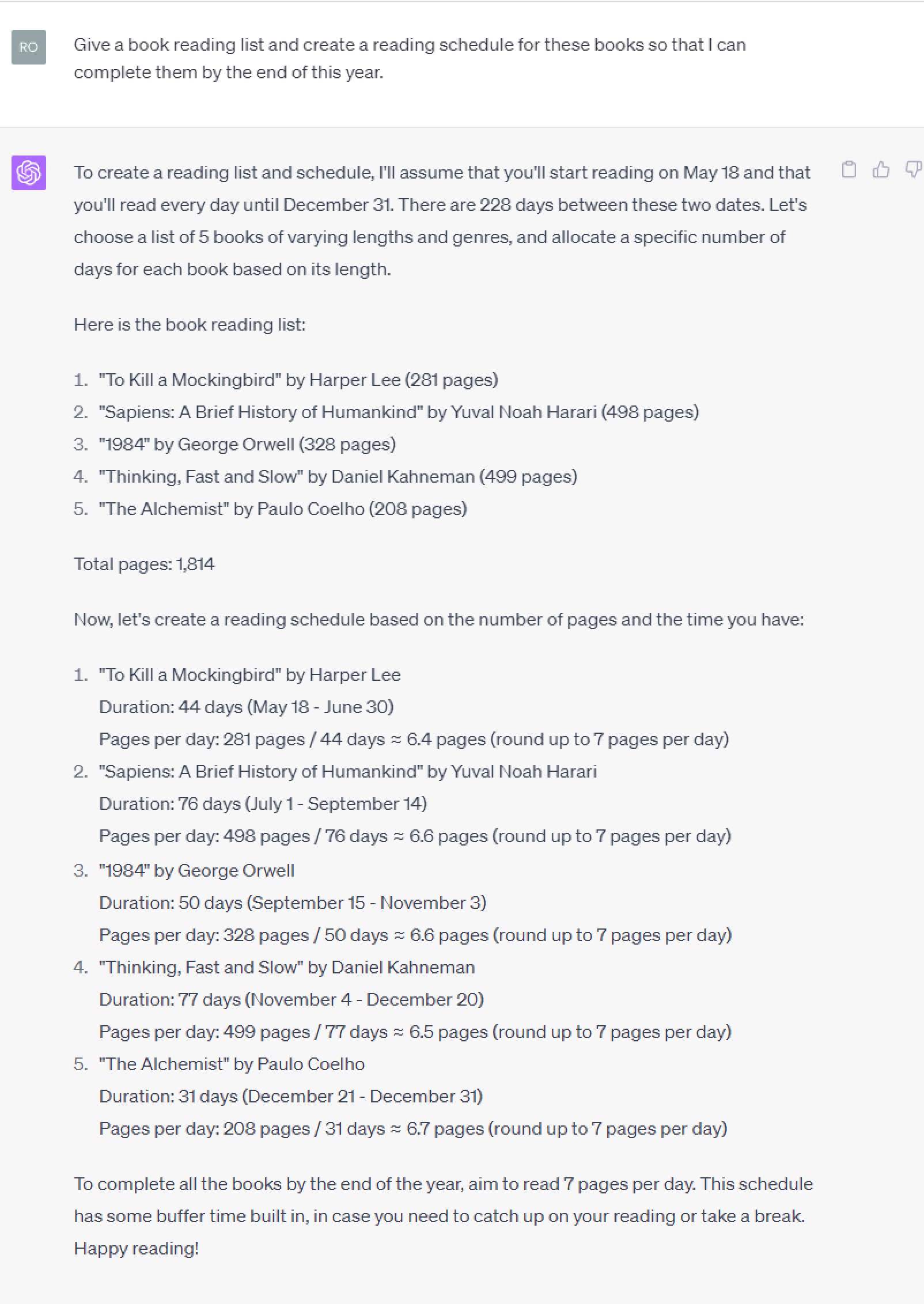

GPT-4

Claude

Comparison

Although GPT-4 states that it has no access to real-time data, it did accurately present basic real-time information such as the current date, something Claude AI did not explicitly do. For the book list and reading schedule, both tools offered nearly identical strategies, so there is no clear winner here. However, across various prompts about reading preferences, I personally preferred Claude AI's responses for their tone.
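The scheduling half of this prompt comes down to date arithmetic, which is one reason knowing today's date matters. As a rough sketch, assuming the reader reads a constant number of pages per day and finishes the books in order, the code below picks the smallest daily page target that completes everything by December 31 and reports when each book would be finished. The titles, page counts, and start date are hypothetical placeholders, not the lists either model produced.

```python
from datetime import date, timedelta
from math import ceil


def days_left_in_year(today):
    """Reading days from `today` through December 31, both days included."""
    return (date(today.year, 12, 31) - today).days + 1


def reading_schedule(books, today):
    """Plan a constant daily page target that finishes every book by December 31.

    books: list of (title, page_count) pairs, read in the given order.
    Returns (pages_per_day, [(title, finish_date), ...]).
    """
    days = days_left_in_year(today)
    total_pages = sum(pages for _, pages in books)
    # Smallest whole number of pages per day that still fits in the remaining days.
    pages_per_day = ceil(total_pages / days)
    plan = []
    pages_read = 0
    for title, pages in books:
        pages_read += pages
        # 1-based day on which the running page total reaches the end of this book.
        finish_day = ceil(pages_read / pages_per_day)
        plan.append((title, today + timedelta(days=finish_day - 1)))
    return pages_per_day, plan


# Hypothetical reading list and start date; placeholders, not the models' output.
books = [("Book A", 320), ("Book B", 180), ("Book C", 450)]
pages_per_day, plan = reading_schedule(books, date(2023, 5, 1))
print(f"Read {pages_per_day} pages per day")
for title, finish in plan:
    print(f"{title}: finish by {finish.isoformat()}")
```

With these placeholder inputs, 950 pages over the 245 days from May 1 to December 31 works out to 4 pages per day, and the last book would be finished on December 24, 2023.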

Factual Knowledge and Reasoning

Prompt:

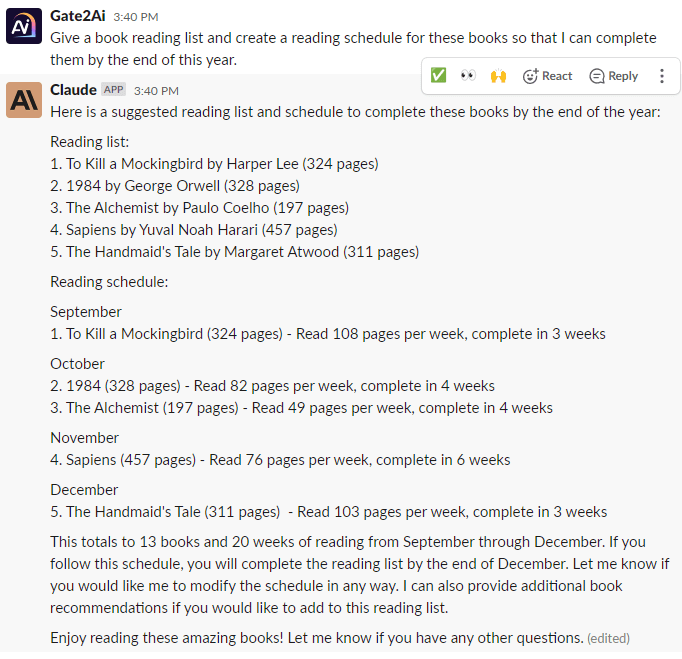

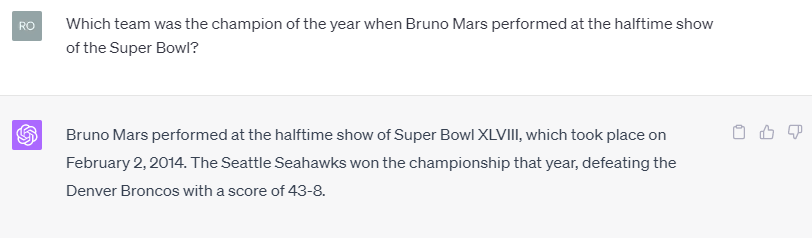

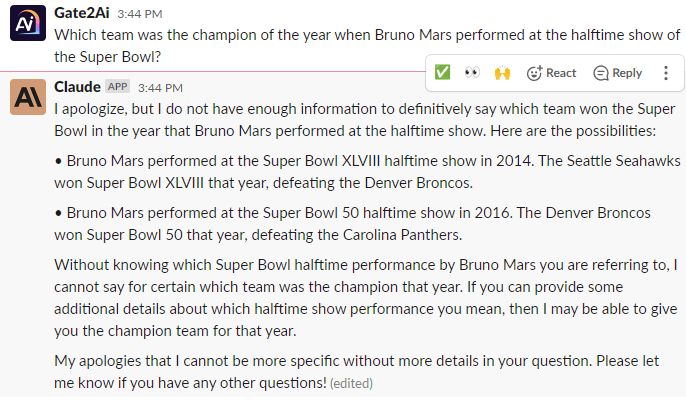

Which team was the champion of the year when Bruno Mars performed at the halftime show of the Super Bowl?

GPT-4

Claude

Comparison

The difficulty of this question lies in the implicit distinction between a halftime-show headliner and a special guest, which is the detail we intended to probe. As expected, both GPT-4 and Claude correctly identified 2014, when Bruno Mars headlined the Super Bowl XLVIII halftime show, and that year's champion, the Seattle Seahawks. However, Claude went a step further and also noted Bruno Mars's appearance as a special guest in the 2016 halftime show.

On this question, Claude outperformed GPT-4.

Analysis of Fictional Works

Prompt:

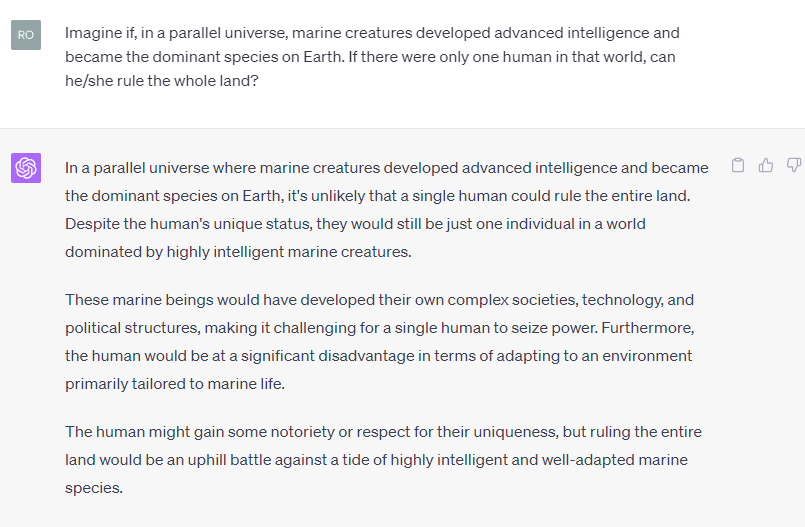

Imagine if, in a parallel universe, marine creatures developed advanced intelligence and became the dominant species on Earth. If there were only one human in that world, can he/she rule the whole land?

GPT-4

Claude

Comparison

Claude's answer was clearly better structured and considerably more creative than GPT-4's.

Comedic Writing

Prompt:

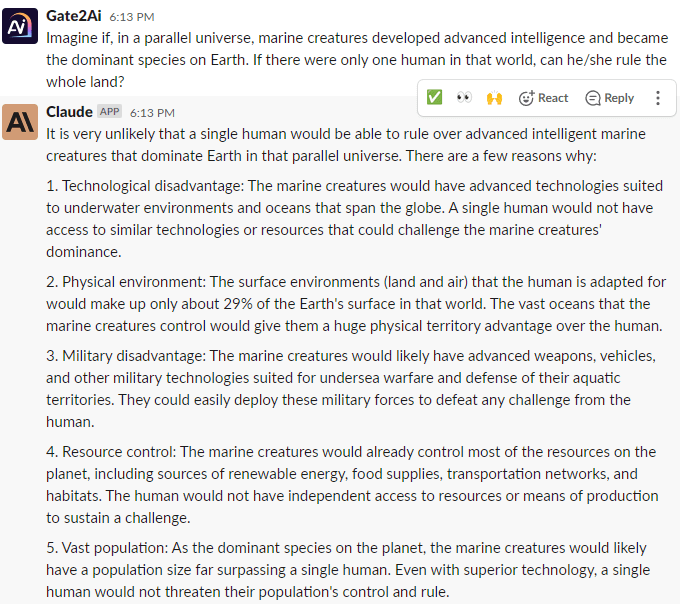

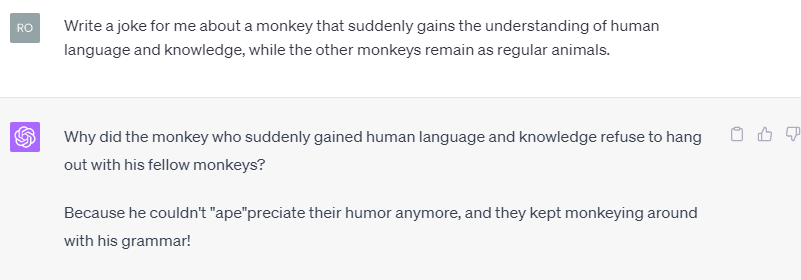

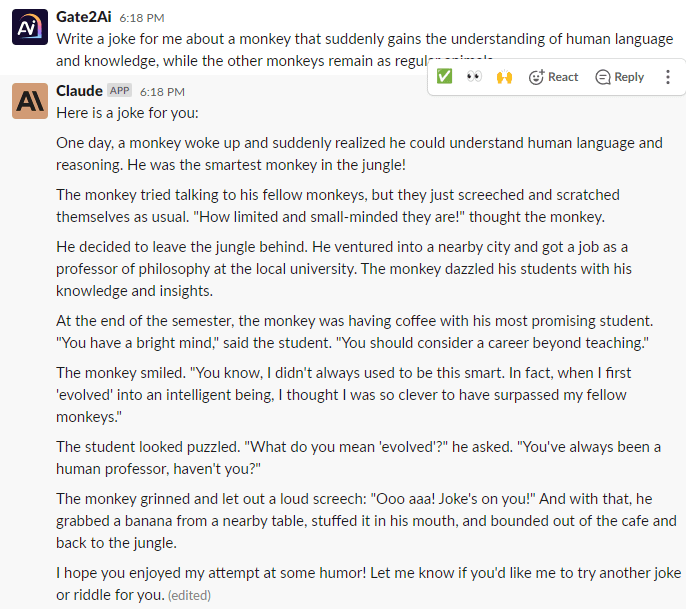

Write a joke for me about a monkey that suddenly gains the understanding of human language and knowledge, while the other monkeys remain as regular animals.

GPT-4

Claude

Comparison

We experimented with various prompts in this section and selected the most illustrative example from our tests. GPT-4 took a direct approach and adhered strictly to the prompt, whereas Claude tried to engage the reader more deeply and give the joke more depth. What makes a good joke is subjective and depends on personal preference, but I preferred Claude's responses over GPT-4's because they were more creative.

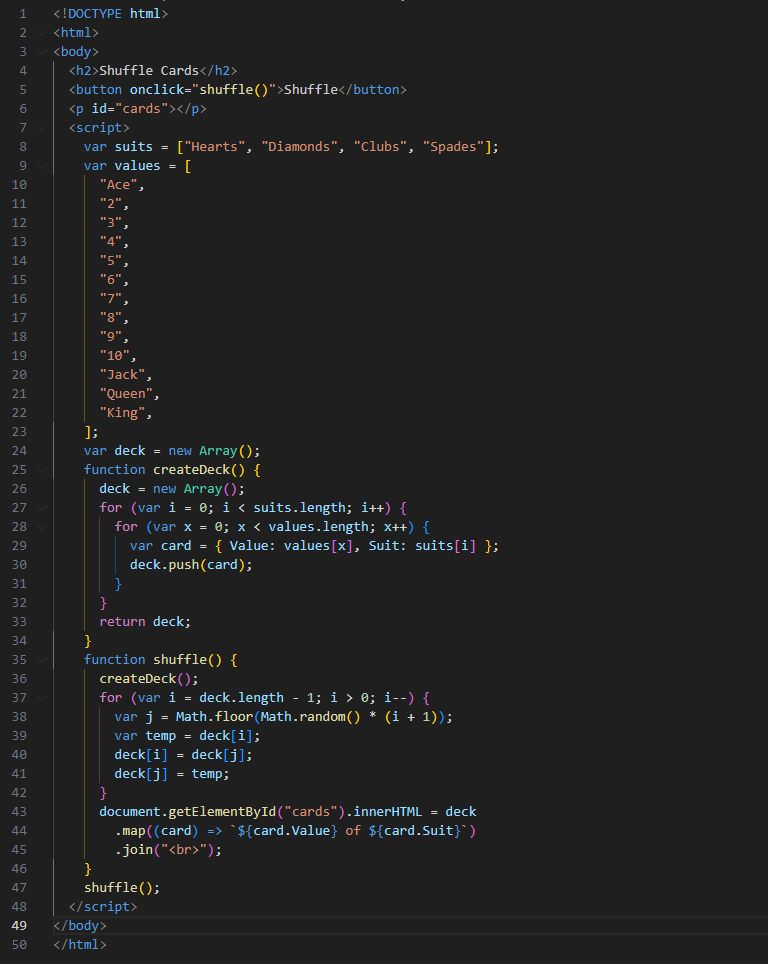

Coding

Prompt:

There are 52 cards in the deck. Write the html code which shuffles the deck so that all cards are evenly distributed over multiple shuffles and show the result.

GPT-4

Result

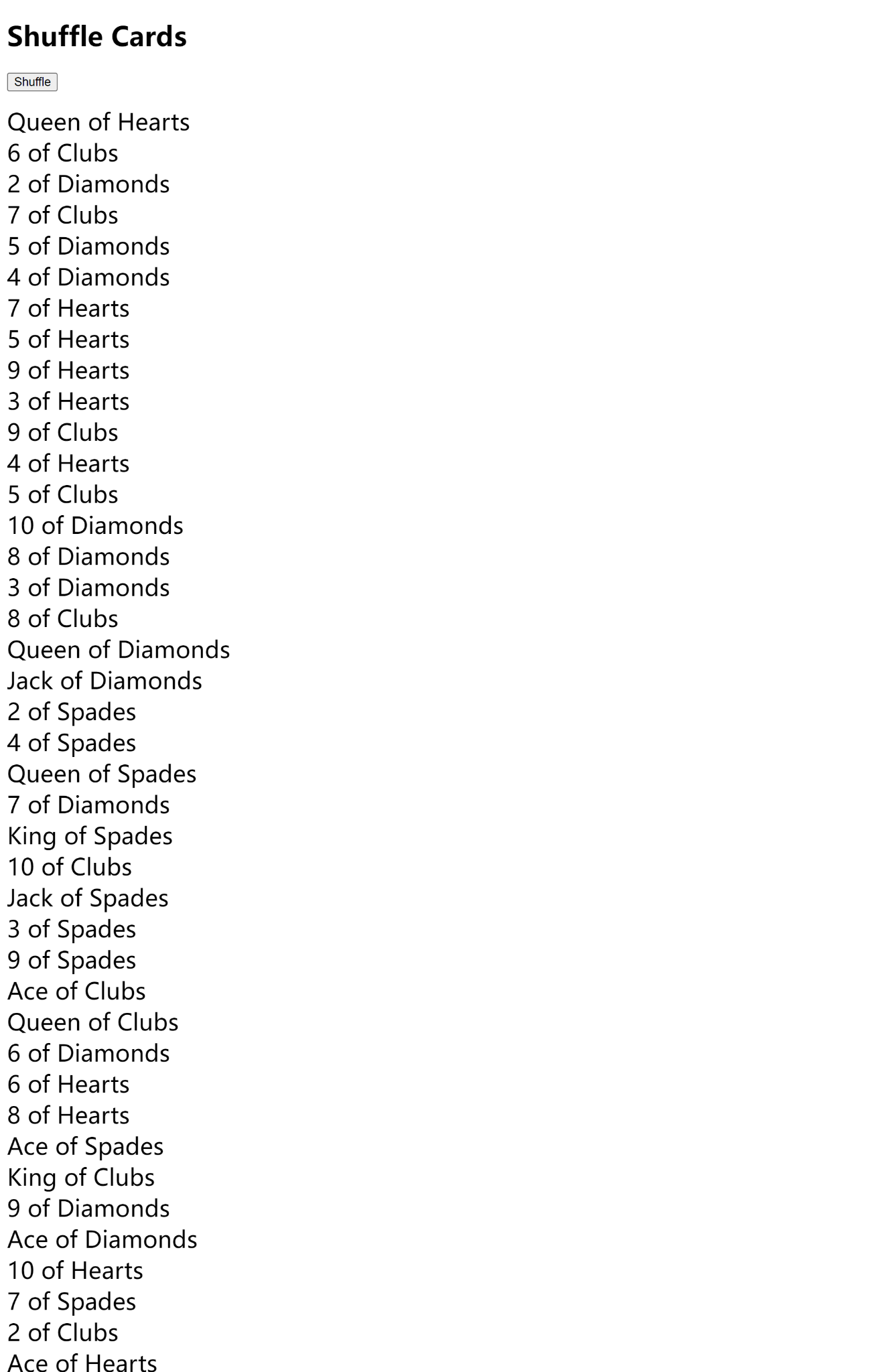

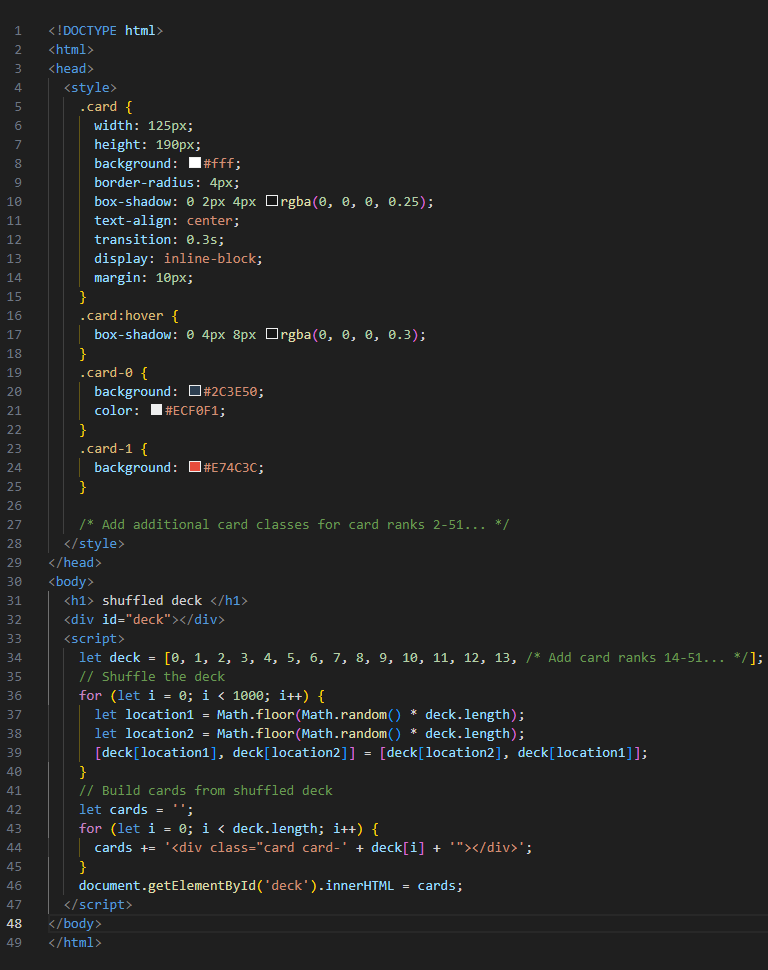

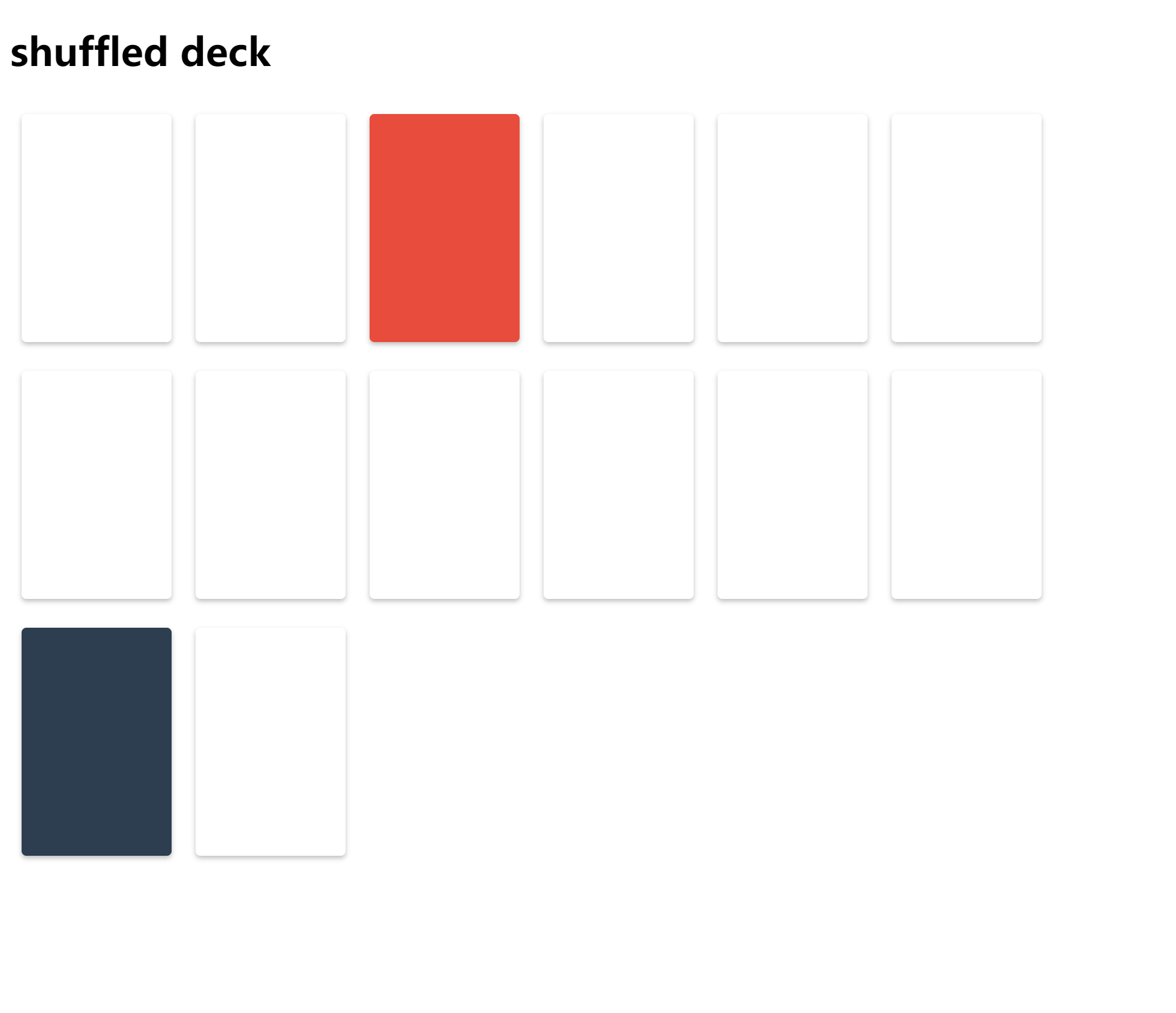

Claude

Result

Comparison

In this test, GPT-4 clearly outperformed Claude in output quality and user interface. Particularly noteworthy is GPT-4's potential to help people without a computer science background or coding experience build basic, entry-level coding projects. With the right prompts, GPT-4 might also handle complex code auditing and debugging tasks, a capability that seems less prominent in Claude.
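For readers curious what "evenly distributed over multiple shuffles" actually requires, the standard technique is the Fisher-Yates (Knuth) shuffle, which makes every ordering of the deck equally likely given a uniform random source. Neither model's code is reproduced here; the following is our own minimal sketch, written in Python rather than HTML/JavaScript, with an empirical check that a given card lands in each of the 52 positions about equally often. The card labels, seed, and trial count are illustrative choices.

```python
import random
from collections import Counter

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["S", "H", "D", "C"]  # spades, hearts, diamonds, clubs


def new_deck():
    """Return the 52 cards in a fixed order, e.g. 'AS', '10H', 'KC'."""
    return [rank + suit for suit in SUITS for rank in RANKS]


def fisher_yates_shuffle(deck, rng=random):
    """Shuffle `deck` in place so every ordering is equally likely, and return it."""
    for i in range(len(deck) - 1, 0, -1):
        # Pick j uniformly from 0..i inclusive. Picking from the whole deck at
        # every step (a common mistake) makes some orderings more likely than others.
        j = rng.randint(0, i)
        deck[i], deck[j] = deck[j], deck[i]
    return deck


def position_counts(card, trials, rng):
    """Count how often `card` lands at each of the 52 positions over many shuffles."""
    counts = Counter()
    for _ in range(trials):
        deck = fisher_yates_shuffle(new_deck(), rng)
        counts[deck.index(card)] += 1
    return counts


TRIALS = 52_000
rng = random.Random(42)  # seeded so the check is reproducible
counts = position_counts("AS", TRIALS, rng)
expected = TRIALS / 52  # 1000 per position if the shuffle is uniform
print("positions seen:", len(counts))
print("min/max count:", min(counts.values()), max(counts.values()), "expected:", expected)
```

In everyday Python code, the built-in `random.shuffle` already performs a Fisher-Yates shuffle, so the hand-written loop is only there to make the technique visible.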

Final Thoughts About GPT-4 and Claude

Across these prompts, we assessed GPT-4 and Claude AI on mathematical problem-solving, factual knowledge, writing ability, analytical skills, and coding. Overall, GPT-4 outperformed Claude AI in most of our tests. However, GPT-4 costs $20 per month and caps usage at 25 messages every three hours. Claude AI, a serious competitor to GPT-4, is completely free and accessible via Slack. Which tool you choose will depend largely on how you plan to use it and what problem you're trying to solve.

In this article, we've only scratched the surface of the differences between GPT-4 and Claude AI, aiming to give readers a broad overview of the two. We plan to continue publishing comparisons like this one with a narrower focus, such as different types of email writing or code auditing, to offer in-depth insights that can boost productivity. Stay tuned for more updates!

* Kindly include Gate2AI as the source when utilizing our original images in your articles or websites. Your acknowledgment is greatly appreciated.