Over the past few years, a new form of artificial intelligence called generative AI has rapidly advanced to produce astonishing results. At the forefront of this technology are neural network architectures like Generative Pre-trained Transformers, better known as GPT.

But what exactly is GPT, and what makes it different from other AI systems? This article will explore the capabilities and limitations of these state-of-the-art natural language models to shed light on how they work and what the future may hold as they continue to evolve.

What is GPT?

GPT stands for Generative Pre-trained Transformer, a family of large language models developed by OpenAI that has driven many recent advances in natural language processing (NLP).

GPT marks a significant transition from AI systems that merely analyze language to systems that can create original text. Using deep neural networks, it generates text that is strikingly similar to human writing, a clear departure from conventional rule-based NLP systems.

How Exactly Does GPT Work?

Transformers

GPT models are built on a neural network architecture called the transformer. First introduced in 2017, the transformer represents a significant evolution beyond the recurrent and convolutional networks previously used for natural language processing.

Transformers rely on a mechanism called self-attention, which lets the model weigh every word against every other word in the input, so each word is interpreted in its full context. Recurrent networks, by contrast, process words one at a time and tend to lose track of context that appeared many words earlier.
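To make self-attention more concrete, here is a minimal sketch in plain Python. It rests on simplifying assumptions: the toy two-dimensional embeddings are made up, and the input vectors serve directly as queries, keys, and values, whereas a real transformer first applies learned projections and uses many attention heads. The optional causal flag illustrates the masking used in GPT-style models, where each position may only look at earlier positions.

```python
import math

def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors, causal=False):
    """Scaled dot-product self-attention over equal-length vectors.

    Simplification: queries, keys, and values are the inputs themselves.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for i, query in enumerate(vectors):
        scores = []
        for j, key in enumerate(vectors):
            if causal and j > i:
                # Masked position: it receives zero weight after softmax.
                scores.append(float("-inf"))
            else:
                dot = sum(q * k for q, k in zip(query, key))
                scores.append(dot / math.sqrt(dim))
        weights = softmax(scores)
        # Each output is a weighted mix of all (visible) input vectors.
        mixed = [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for the words "the", "cat", "sat".
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings, causal=True)
for word, row in zip(["the", "cat", "sat"], weights):
    print(word, [round(w, 2) for w in row])
```

Each row of weights shows how strongly one word attends to the others. With the causal flag set, the first word can only attend to itself.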

The original transformer design has two core components, of which GPT uses only the second:

Encoders: In the original design, the encoder stack reads the whole input at once and turns it into contextual vector representations. GPT has no separate encoder. Instead, it converts each input word into an embedding, a vector that captures both the word's identity and its position. That is to say, the model can learn nuanced relationships between words based on their order and proximity rather than simply analyzing words in isolation.

Decoders: GPT is built from a stack of decoder layers. Each layer uses masked self-attention, so every position can attend only to the words that come before it, and the final layer predicts the next most likely word in the sequence.

Large Datasets

During training, GPT repeatedly predicts the next word across large text datasets and compares its prediction with the word that actually follows. Over many iterations, the model adjusts its internal parameters to predict subsequent text more accurately.
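The idea of tuning parameters to predict the next word can be sketched with a far simpler model than a transformer. The example below is an illustration under stated assumptions, not how GPT is implemented: it trains a bigram table (one row of scores per previous word) with gradient descent on a cross-entropy loss, and the training sentence, step count, and learning rate are arbitrary choices.

```python
import math

def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(text, steps=200, learning_rate=0.5):
    """Fit logits[prev][next] so each row predicts the following word."""
    words = text.split()
    vocab = sorted(set(words))
    index = {w: i for i, w in enumerate(vocab)}
    ids = [index[w] for w in words]
    pairs = list(zip(ids, ids[1:]))  # (previous word, next word) examples
    logits = [[0.0] * len(vocab) for _ in vocab]
    losses = []
    for _ in range(steps):
        grads = [[0.0] * len(vocab) for _ in vocab]
        total_loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(logits[prev])
            total_loss += -math.log(probs[nxt])  # cross-entropy for this example
            for k, p in enumerate(probs):
                # Gradient of softmax cross-entropy: probability minus target.
                grads[prev][k] += p - (1.0 if k == nxt else 0.0)
        for row in range(len(vocab)):
            for k in range(len(vocab)):
                logits[row][k] -= learning_rate * grads[row][k] / len(pairs)
        losses.append(total_loss / len(pairs))
    return vocab, logits, losses

vocab, logits, losses = train_bigram("the cat sat on the mat the cat ran")
print(f"loss at start: {losses[0]:.3f}, loss at end: {losses[-1]:.3f}")
```

The average loss falls as the scores are adjusted, which is the same feedback signal, at a vastly smaller scale, that drives training of a large language model.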

Once trained, GPT uses what it has learned about language to take prompts and generate natural responses one word at a time (more precisely, one token at a time). At each step, the model attends to the most relevant words in the text so far to determine the most probable next word.
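The word-by-word generation loop can be sketched as follows. The probability table here is entirely hypothetical and hand-written, and it only looks at the previous word, whereas a real model computes next-word probabilities from the whole prompt. The loop also always picks the single most probable word (greedy decoding); production systems often sample from the distribution instead.

```python
# Hypothetical next-word probabilities, hand-written for illustration only.
NEXT_WORD_PROBS = {
    "the": {"cat": 0.6, "mat": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"on": 0.9, "down": 0.1},
    "on": {"the": 1.0},
}

def generate(prompt, max_new_words=3):
    """Extend the prompt by repeatedly appending the most probable next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if not candidates:
            break  # no known continuation, so stop early
        words.append(max(candidates, key=candidates.get))
    return " ".join(words)

print(generate("the"))  # the cat sat on
```

Each generated word is fed back in as part of the input for the next step, which is why this style of generation is called autoregressive.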

It acquires a statistical model of language, not by adhering to inflexible rules but through a comprehensive training process. The strength of this approach lies in its versatility, enabling it to deal with a broad spectrum of conversational prompts and subjects. It achieves this by leveraging its deep understanding of human speech and writing patterns.

Evolution of OpenAI GPT Models

Generative Pre-trained Transformer models have advanced rapidly, driven mainly by refinements to the transformer architecture, larger model sizes, and larger training datasets. The series has now reached GPT-4. Each generation is summarized below.

GPT-1: The Original Pioneering Architecture

First introduced in 2018

117 million parameters

This first version combined unsupervised pre-training on unlabeled text with supervised fine-tuning for each downstream task. Despite its modest scale, the first generation showed the potential of generative pre-training for NLP.

GPT-2: Massive Scaling Unlocks New Horizons

Released in 2019

1.5 billion parameters

10x larger dataset than its predecessor

GPT-2 widened the horizon for generative AI by increasing the model size more than 10-fold to 1.5 billion parameters. It was trained on WebText, roughly 40 GB of text gathered from web pages linked on Reddit.

This scaling enabled GPT-2 to generate cohesive paragraphs of text. It also demonstrated competency in simple translation and summarization tasks in a zero-shot setting, that is, without any task-specific training.

GPT-3: The AI Boom Heard 'Round the World

Unveiled in 2020

175 billion parameters

About 45 terabytes of raw web text, filtered down to roughly 570 GB for training

GPT-3 profoundly impacted the field with its 175 billion parameters and a training corpus built largely from about 570 GB of filtered web text, supplemented by other curated sources. It could produce articulate, well-structured text spanning several paragraphs.

The release of GPT-3 triggered an explosion of interest and investment into generative AI. Its natural language capabilities were so impressive that many proclaimed it could revolutionize human interaction with machines.

GPT-3.5: Edging Toward Common Sense

Introduced in 2022

Includes "text-davinci-002" and "code-davinci-002" models

More capable than its predecessor

Seeking further improvements, GPT-3.5 refined and expanded the model training approach. Changes included augmented data processing and utilizing reinforcement learning from human feedback.

These advancements enhanced its conversational abilities while reducing harmful biases and flaws. The model displayed improved comprehension, reasoning, and common sense.

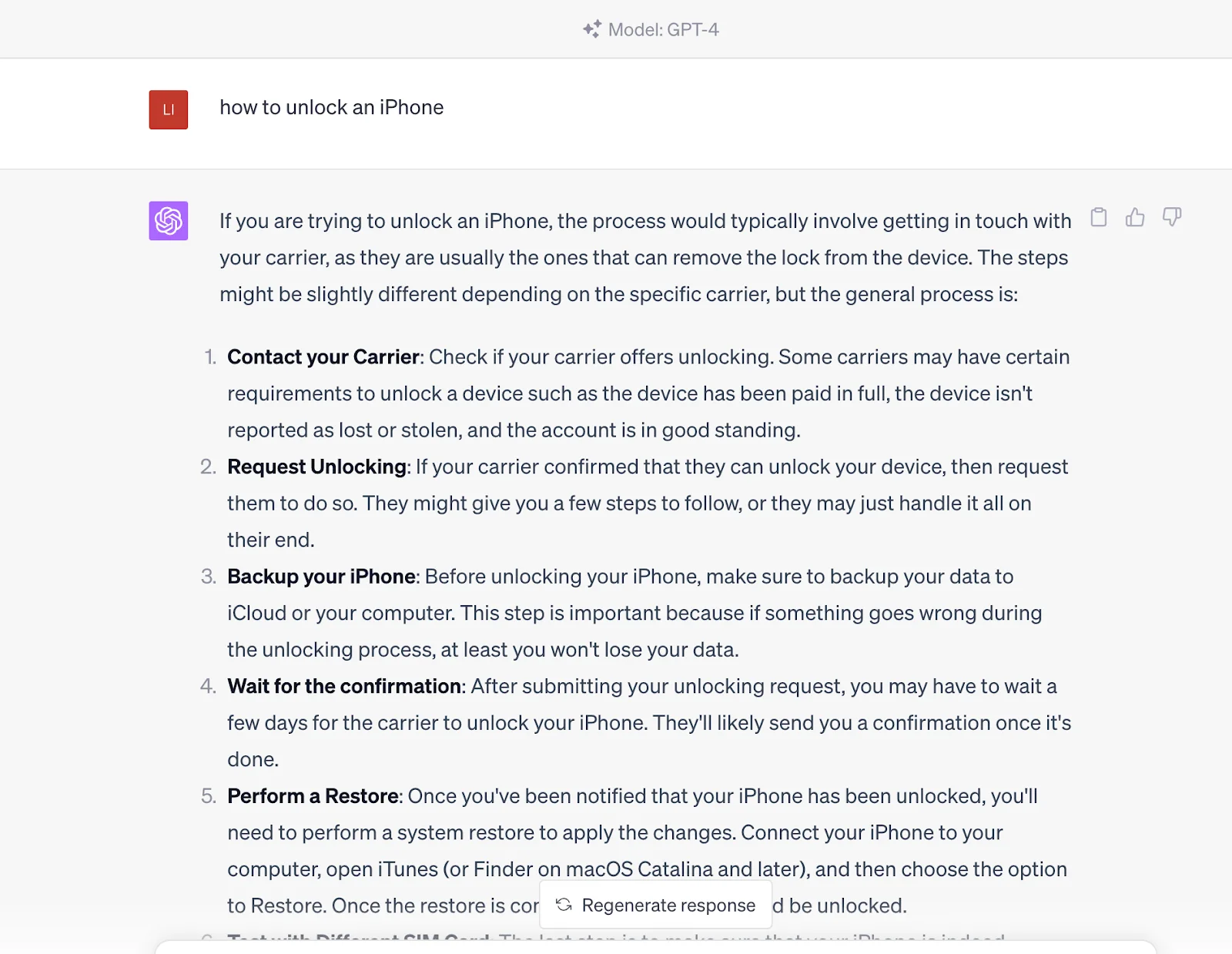

GPT-4: Pushing the Boundaries Again

Announced in March 2023

Multimodal - can process images and text

Outperforms GPT-3.5 in reasoning and problem-solving tests

The latest GPT-4 model brings another leap forward as the first version capable of ingesting text and images. This feature allows it to interpret and comment on visual inputs using natural language.

It showcases heightened creativity, an aptitude for writing computer code, and impressive performance on human-designed examinations. Like its predecessors, GPT-4 shows how quickly generative AI is evolving and how much potential remains to be explored.

How to Use GPT?

Generative Pre-trained Transformer models owe their versatility to the transformer architecture and to broad pre-training, which together make them adaptable tools with many applications. They can take on numerous tasks given nothing more than a natural language prompt. Here are some illustrative examples:

Automating Written Content Across All Styles

One of the most popular applications is using GPT for various writing and content generation tasks. The models can produce the following:

long-form articles;

short-form social media posts;

poetry;

jokes;

lyrics;

emails;

code and more based on creative prompts.

The progress seen in models such as GPT-3 now allows for the generation of considerably extended content without compromising grammar, structure, or logical coherence. This technological breakthrough lays the groundwork for automating various tasks, catering to numerous sectors and needs.

Building Smarter Chatbots and Virtual Assistants

GPT shines in natural language processing, making it ideal for conversational AI applications. The models can chat fluently on nearly any topic while answering follow-up questions with remarkable coherence.

You can integrate the models into chatbots, virtual assistants, customer service bots, and similar tools, resulting in more natural-feeling conversations. The models can also draw on contextual clues from earlier in a conversation to hold smarter dialogues.
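One common way to give a chatbot conversational context is to resend recent messages with every request. The sketch below shows that pattern under stated assumptions: the role and content message format is a convention used for illustration, the function names are hypothetical, and reply_stub stands in for a real model call rather than any actual API.

```python
def reply_stub(context):
    """Placeholder for a real model call; it only reports how much context it saw."""
    return f"(reply based on {len(context)} messages)"

def chat_turn(history, user_message, model=reply_stub, max_messages=6):
    """Add the user's message, keep only recent messages, and append the reply."""
    context = history + [{"role": "user", "content": user_message}]
    context = context[-max_messages:]  # trim so the context stays bounded
    answer = model(context)
    return context + [{"role": "assistant", "content": answer}]

history = []
history = chat_turn(history, "Hi, I need help with my order.")
history = chat_turn(history, "It has not arrived yet.")
for message in history:
    print(message["role"], ":", message["content"])
```

Trimming to the most recent messages is the simplest policy. Because models accept only a limited amount of input, longer conversations need some strategy like this, or a summary of earlier turns.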

Analyzing Data and Documents

Although best known for text generation, the models are also capable of ingesting, comprehending, and analyzing information. With the proper prompts, they can swiftly sift through datasets or documents, extracting crucial data points and relationships and surfacing useful insights.

GPT's statistical learning empowers it to pinpoint topics and keywords, condense lengthy reports into easily understandable segments, and detect patterns across divergent data sources. These features significantly enhance the scope of analysis work. Consequently, they support scientists, analysts, and researchers in their work.

Coding and Technical Writing

With its prowess for pattern recognition, GPT can generate functional computer code from simple natural language descriptions of the desired behavior. The models have produced working code in languages like Python and JavaScript, and even more complex programs such as a React web app.

Similarly, the models make adept technical writers and translators. Engineers can use them to automatically generate technical documentation, API explanations, user manuals, and release notes from brief English prompts.

Virtually any application involving text or language is fertile ground for GPT integration. The models keep expanding the boundaries of what's possible in language technology. As they advance, they may find uses beyond anything technologists have envisioned.

Limitations and Concerns of GPT

While representing an enormous leap forward, Generative Pre-trained Transformer models still have notable limitations.

Susceptibility to Hallucination and Misinformation

A core limitation is that these models frequently "hallucinate", generating false or nonsensical text with no factual basis. This happens because the models reproduce statistical patterns in language but don't truly comprehend the content or check it against facts.

The hallucinated text sounds convincing but lacks veracity. If you take the hallucinatory output as ground truth, you risk spreading misinformation. More work is needed to enhance fact-checking abilities.

Reflecting Training Data Biases

Since GPT mimics patterns in training data, it tends to inherit and amplify any biases in the source material. For instance, models trained on text with societal biases may output prejudiced or toxic language.

To tackle biases, we need to diversify our training data and apply an ethical approach to mitigation. Despite advancements, bias continues to be a challenge that requires constant attention.

Transparency and Explainability

Details of the models' training procedures, structure, and datasets remain largely undisclosed, leaving little room for independent researchers to examine the systems thoroughly. A thorough understanding of the models' limitations, biases, abilities, and commercial objectives is essential. Greater transparency and accountability in how the models are built is therefore critical.

Possibilities of Misuse and Harms

The potential for misusing GPT models to spread misinformation, spam, hate speech, and social engineering scams remains a threat. However, the creators have implemented safeguards to minimize harm, like blocking dangerous or unethical output.

More guardrails focused on safety, ethics, and principles of AI alignment must accompany ongoing advances. Overall, the pace of progress continues to outstrip governance solutions.

Environmental and Economic Concerns

Training and running advanced neural networks consumes enormous computing resources. Training a large Generative Pre-trained Transformer model can cost millions of dollars and produce tons of greenhouse gas emissions through its energy use.

Managing the ensuing environmental impact and democratizing access to leading-edge models remain challenges. However, solutions like energy-efficient hardware and optimized code can help.

What's Next in the Evolution of GPT?

While progress has been astounding, Generative Pre-trained Transformers have yet to achieve true human-level intelligence. Moving forward, researchers aim to enhance contextual reasoning, semantic understanding, and general knowledge about the world.

There is also an increased focus on aligning the goals and values of advanced AI with human ethics. More transparent development and vetting processes could reduce misuse and bias risks while allowing more comprehensive research access.

Their capabilities will likely continue to advance rapidly alongside growth in data and computation. This progress hints at a future in which sufficiently advanced AI may someday pass the Turing test by producing conversation indistinguishable from a human's.

Conclusion

GPT represents a monumental advance in AI creativity, unlocking new potential across countless applications. But as these models continue to evolve rapidly, developers must address lingering limitations around bias, safety, and transparency. If paired with ethical governance, Generative Pre-trained Transformers promise to expand how humans and machines interact, create, and communicate. Let us wait and see!